17 February 2023 | FinTech

The Most Intriguing (and Terrifying) Fintech Use Case for Generative AI

By Alex Johnson

I’ve been playing around with generative AI tools for the last few months, occasionally using them to write my intros or create images when I couldn’t find or build the exact thing I wanted.

These experiments have been diverting and, occasionally, valuable for my work. However, I haven’t seen or experienced anything in this generative AI wild west that was compelling enough to motivate me to write the essay that the Managing Editor of Fintech Takes (an imaginary character who lives in my brain and is obsessed with clicks) has been begging me to write – How Generative AI Will Change Fintech and Financial Services Forever.

Until today.

Now don’t worry. I’m not going to shamelessly hype up generative AI or declare that any fintech company or bank that doesn’t immediately start integrating generative AI into its processes and products is NGMI. In fact, today’s essay could more accurately be classified as a warning not to rush too quickly into this brave new world.

But I’m getting ahead of myself. First, let’s try to define what generative AI is and explain how it works.

Generative AI 101

Generative AI is a category of artificial intelligence techniques designed to generate (hence the name) original content based on a given set of input data. The original content created by these generative AI models can be text (ChatGPT), images (DALL·E), music (MusicLM), and even 3D objects (GET3D).

The big breakthrough behind generative AI is something called large language models (LLMs). The basic idea behind these models is to learn to predict the probability of the next word in the desired output response based on the context of the preceding words.
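To make "predict the probability of the next word" a little more concrete, here is a toy sketch in Python. It is not how an LLM is built (real models use neural networks and far longer context than one word); it just counts which word follows which in a tiny made-up sentence and turns those counts into probabilities. The function names and the sample sentence are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the follow-up counts for `prev` into probabilities."""
    followers = counts.get(prev.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # word never seen, so there is nothing to predict from
    return {word: n / total for word, n in followers.items()}


# Hypothetical training text, invented for this example.
corpus = "the bank approved the loan and the bank denied the card"
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(probs)
```

After "the", the toy model considers "bank" twice as likely as "loan" or "card", simply because that is what it saw in its training text. An LLM is doing a vastly more sophisticated version of the same job.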

There are three things that are especially cool about LLMs. First, many of them rely on a type of neural network architecture called a transformer, which allows them to pay attention to multiple parts of the input data that is fed into them. This enables the model to capture long-range dependencies and relationships between different parts of text, which is essential for generating natural language outputs that actually sound human.

The second thing that’s neat about them is that they are able to efficiently handle large amounts of training data. In machine learning, the more data you use to train your model, the better the model will generally perform. The challenge is that many traditional machine learning models use supervised learning techniques that require the training data that is fed into them to be supremely clean and clearly annotated, and creating clean and annotated training data takes a lot of manual human effort. LLMs generally utilize unsupervised learning techniques, which allow them to learn from huge quantities of unstructured data. This means that the data scientists building these models don’t have to waste time carefully preparing their data. They just dump in as much as they can scrape together – ChatGPT was trained on a data set culled from the internet, totaling roughly 300 billion words – and let the model discover patterns within the data (word co-occurrence, semantic relationships, syntax, etc.) without explicitly being told what those patterns are.

And finally, the basic idea behind LLMs can be generalized across different data types. Take images as an example. In the same way that LLMs can be taught to predict the correct sequence of words through unsupervised learning, similar models (like Vision-Language Pre-training) can be used to predict the correct sequence of pixels in an image. These image generation models are more computationally intensive than text generation models (there are a lot more pixels in an image than words in an essay), but they produce startlingly good results.
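For the curious, the "pay attention to multiple parts of the input" idea behind transformers can be sketched in a few lines of Python. This is a minimal, single-query version of what is usually called scaled dot-product attention, written with plain lists rather than a real machine learning library. The vectors are made-up numbers, and an actual transformer learns its queries, keys, and values during training and runs many of these steps in parallel.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # How well does the query match each key?
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Blend the values, weighted by how much attention each one earned.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Three hypothetical input positions, each with a key and a value vector.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print(weights, output)
```

The query here lines up with the first and third keys, so those two positions get more weight than the second. That weighting is how the model decides which parts of the input matter for the word it is currently working on.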

Once you have your underlying LLM created, you can then calibrate your pre-trained model for a specific task, like, for example, creating an app that can generate Middle English poetry from simple text inputs. This refinement can be done using a technique called supervised fine-tuning, in which you train your pre-trained model on a new task using a smaller set of labeled data. Or it can be done using a technique called reinforcement learning from human feedback (RLHF), in which the pre-trained model produces an output, and then an actual human provides feedback on it (correct or incorrect), and the model incorporates that feedback and iteratively works to improve its outputs. Both of these techniques – which are often used in conjunction – require a great deal more manual effort than the initial unsupervised learning, but they are essential for getting the models powering generative AI tools through the last mile and producing outputs that read/look/sound eerily human.

OK, those are the basics on how generative AI and LLMs work (for a much better and more detailed explanation, check out this article).

Next question – how might we leverage these tools in financial services?

Generative AI in Financial Services

Let’s start by thinking about this question in terms of functionality. What specific functions or tasks in financial services is generative AI especially well suited to add value to?

To answer this question, I’m going to lean on Sarah Hinkfuss, a Partner at Bain Capital Ventures and the author of a recently released article that provides a wonderful deep dive into the potential of generative AI in fintech.

As discussed above, generative AI tools are usually based on a foundational LLM that is trained on a massive set of unstructured data. Based on the exact task that the generative AI tool is being designed to perform, data scientists will then augment the pre-trained model using supervised learning techniques and specialized or proprietary datasets, as well as reinforcement learning from human feedback. The resulting fine-tuned model is then built into the desired product or software workflow (e.g., Notion embedding a ChatGPT-powered digital assistant into its note-taking workflows).

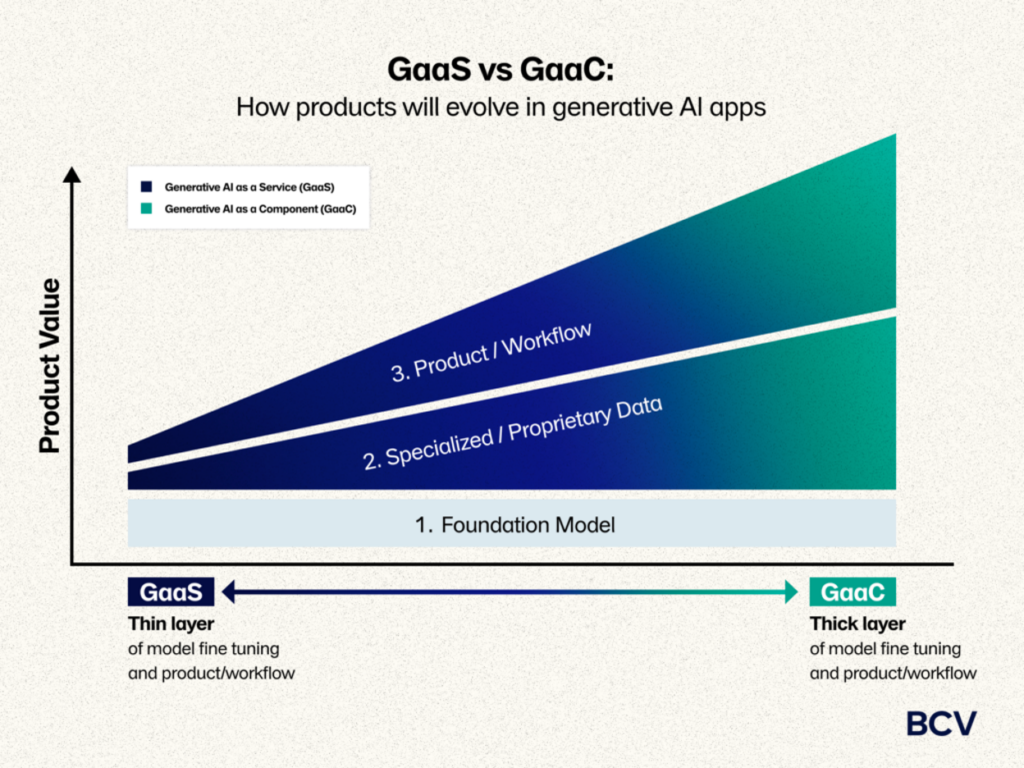

In her article, Sarah draws a distinction between what she calls generative-AI-as-a-service (use of the foundational LLM with little to no fine-tuning) and generative-AI-as-a-component (use of the foundational LLM with lots of specialized fine-tuning and software wrapped around it). This distinction between GaaS and GaaC can be thought of as a spectrum:

And Sarah makes a convincing case that the future of generative AI in financial services will (at least in the short term) lie on the GaaC side of the spectrum:

for the foreseeable future, we expect that generative AI apps that successfully sell to financial services companies will be on the GaaC side of the spectrum, delivering AI as a component within the broader software or workflow process. There are two reasons for this. First, financial services data is not part of the publicly available internet datasets that have trained the foundational LLM models; these datasets are proprietary and private. So, apps leveraging the foundational models must fine-tune the models to make them relevant to the financial services use cases. Second, companies in this market will not buy generative AI to do a job because it’s not 100% accurate. Financial services companies will buy software (or services) to do a job, and the best software companies will leverage the best tools to make that job faster, cheaper, and better, including generative AI. In many cases, humans will still need to be in the loop, but the humans will be better informed, more efficient, and produce fewer errors with the assistance of generative AI. We argue that humans verifying vs creating is a huge value add.

It’s worth emphasizing one point in that paragraph – accuracy matters in financial services. By its nature, financial services is a low-fault-tolerance business. This is partly due to regulation (screwing up on something like AML earns you hefty fines), partly due to risk (one bad commercial loan can wipe out the profits from a whole loan book), and partly due to reputation (trust is built incrementally but it can be destroyed in an instant).

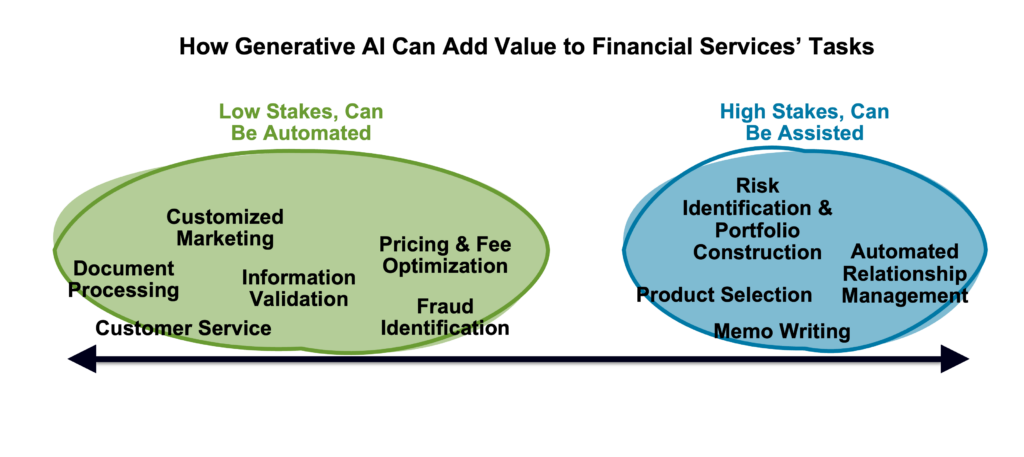

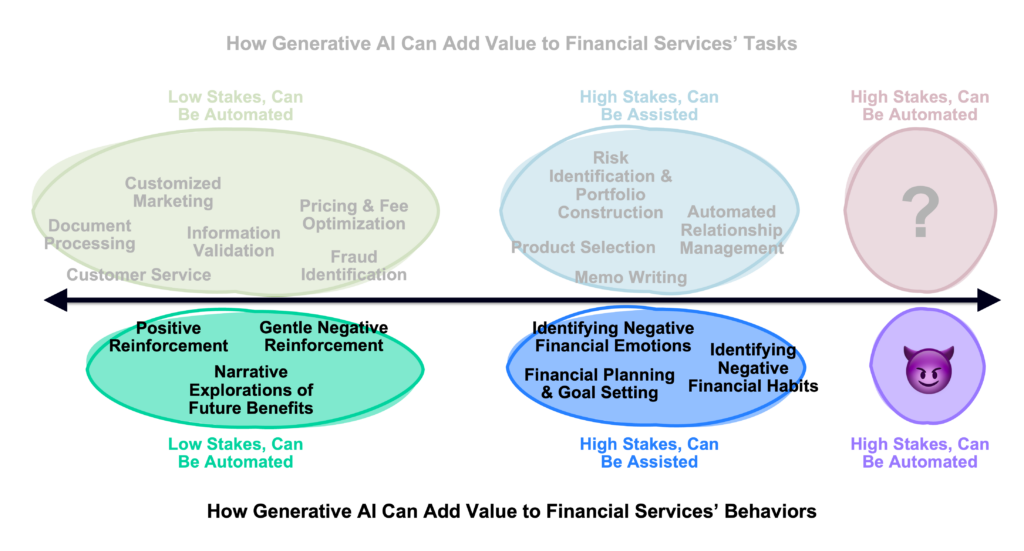

Given this, I think one reasonable way to think about how generative AI may impact various tasks in financial services is to think in terms of the stakes:

- Low Stakes, Can Be Automated. These are the tasks in financial services where the fault tolerance is a little higher. A lot of these tasks are likely already being automated, but generative AI may prove to be a superior solution.

- High Stakes, Can Be Assisted. These are the higher risk (and higher value) tasks that financial services providers spend a lot of money and manual resources to get right. They have a low fault tolerance and are often handled (or at least carefully overseen) by humans. Generative AI has the potential to assist humans in these tasks, greatly improving their accuracy and efficiency.

The Bain Capital Ventures article lists 10 possible applications of generative AI in financial services across distribution, manufacturing, and servicing:

1.) Customized marketing: Generative AI can develop audience-specific messages to market new products or existing product upsells to customers, increasing sales and conversion

2.) Document processing: One of the long poles in the tent for applying for many financial services products is the iterative input and verification of information. Generative AI can go even further than verification-based AI to automate the process of extracting information and forward-looking guidance from financial documents, such as from invoices and contracts

3.) Information validation: The application process for financial services often requires validating information. The most difficult products to validate include those that can be represented in multiple formats rendering simple rules-based logic useless. Rather than have humans manually check policies, or code policies based on contents so rules-based logic can work, generative AI can determine whether a policy meets standard criteria

4.) Fraud identification: Generative AI can produce new training data to train and re-train fraud models. One of the challenges with piracy and fraud has been the cat and mouse game of security providers building to address the latest exploited weakness, only for fraudsters to find the next weakness. Training models on yet-unseen examples of fraud generated by generative AI provides the opportunity to stay one step ahead

5.) Memo writing: Believe it or not, many financial services products still require a formal memo be submitted and reviewed by committee for major decisions. Similar to the opportunity to apply generative AI in the legal world, generative AI can review a full application and write a first draft of a memo

6.) Risk identification and portfolio construction: Generative AI can help companies consider all available data to more accurately forecast future performance and identify uncorrelated risks

7.) Pricing and fee optimization: While many financial services products require pretty homogeneous pricing (or at least pricing consistent with rules submitted to the regulators), some products can vary widely within regulated bands. Using generative AI, financial services organizations can better evaluate the willingness to pay for certain products or ability to pay certain fees and maximize customer LTV

8.) Product selection: Financial services products are often difficult to understand and not described in layman’s terms, which leads to significant deterioration in conversion percentage and speed. Using generative AI, financial services companies can explain the product offering to customer prospects, or compare different financial products, as well as answer follow-up questions around coverages or limits

9.) Automated relationship management: Relationship managers support clients to get the most value out of their product, such as insurance brokers, private wealth managers, and commercial bankers. The highest NPS relationships are highly customized and responsive, but this is too expensive to make available to any customers but the highest value customers. With generative AI, sensitive, custom service can become standard

10.) Customer service: AI-powered chatbots can help companies provide 24/7 customer service and support, handling a wide range of customer queries and issues, such as providing financial advice and helping with account management

Now, let’s plot them out based on their stakes:

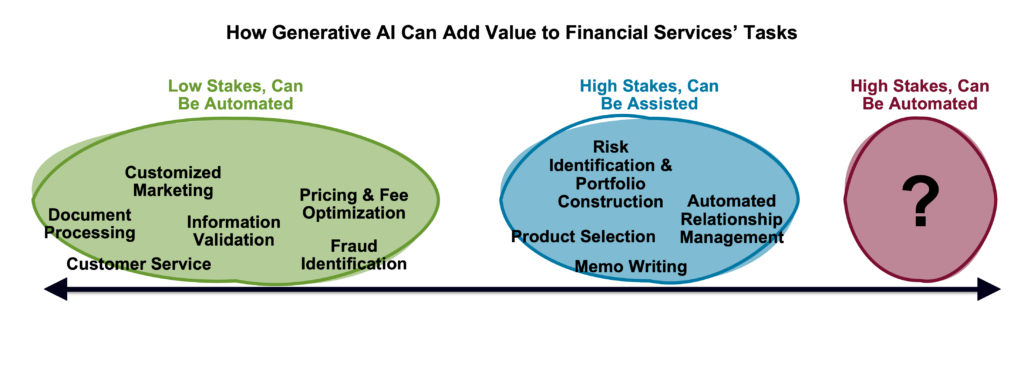

The obvious next question is: can we go further? Might we someday be able to add a third category on the far right side of the continuum – High Stakes, Can Be Automated?

The advantages of pushing out to this new frontier are obvious. However, I find it unlikely that high-stakes tasks in financial services will ever end up being fully automated through the use of generative AI tools. I don’t see a world in which regulators and consumer advocates become comfortable with it (you don’t have to read many of the public comments by the Director of the CFPB to understand how he feels about the use of algorithms in financial services). And I’m not sure generative AI will ever reach a level of accuracy where it would justify anyone feeling comfortable with it. As we’ve seen in recent demos by Google and Microsoft, the cutting edge of generative AI has a long way to go in terms of accuracy. Yes, the technology is still young, and its performance will undoubtedly improve with time. However, we should also note that a certain amount of inaccuracy will likely always be expected with generative AI given that the underlying architecture is probabilistic, not deterministic (think slot machine vs. calculator).
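Here is a small Python sketch of the slot machine vs. calculator distinction. It assumes a model has already produced a probability for each candidate next word (the three words and their probabilities are invented for this example) and compares two ways of choosing: always taking the most likely word, and sampling in proportion to the probabilities, which is roughly what chat tools tend to do to keep their output from sounding robotic.

```python
import random


def greedy_pick(probs):
    """Calculator mode: always return the single most likely word."""
    return max(probs, key=probs.get)


def sample_pick(probs, rng):
    """Slot machine mode: draw a word in proportion to its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


# Hypothetical next-word probabilities for "Your loan application was ...".
next_word = {"approved": 0.6, "denied": 0.3, "pending": 0.1}

rng = random.Random(0)  # fixed seed so the experiment is repeatable
greedy_runs = {greedy_pick(next_word) for _ in range(1000)}
sampled_runs = {sample_pick(next_word, rng) for _ in range(1000)}

print("greedy answers seen: ", greedy_runs)
print("sampled answers seen:", sampled_runs)
```

The greedy picker gives the same answer on every one of the 1,000 runs. The sampler gives "approved" most of the time, but not every time, and in a loan decision "most of the time" is exactly the problem.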

So, assisting humans with high-stakes tasks is the practical limit of where generative AI can add value in financial services, right?

Not so fast.

The Emotional Side of Financial Services

So far, our exploration of where generative AI can add value in financial services has been anchored on functionality – what are the tasks that need doing, and how could generative AI tools automate those tasks or make humans more efficient at completing them?

This is a very supplier-focused way of looking at it – how can generative AI make banks and fintech companies more efficient and effective?

That’s fine, but the more important question sits on the demand side of the equation – how can generative AI help consumers improve their financial health and build wealth?

And here’s the thing about this question – it’s not really about functionality. I mean, it is, to an extent. Generative AI can, for example, be used to create a much better version of the chatbots that banks and fintech companies push their customers to use for simple customer service requests. But that’s not the big problem in financial services. Consumers don’t need access to better tools. They need help cultivating better behaviors.

A tremendous amount of research has gone into the following question: what are the most effective ways to help an individual improve their financial behaviors?

The intuitive answer is financial literacy; if consumers have more information and a solid understanding of financial best practices, they will put those ideas into practice and make better decisions and that will produce better financial outcomes.

We want to think this is true.

The trouble is it’s not. Research has demonstrated that financial literacy, by itself, doesn’t do much to alter long-term financial behavior and decision-making. And if we think about this in a different context, it makes sense – I understand, intellectually, that regular exercise is one of the most important things I can do to improve my physical and mental health, but I don’t do it nearly as often as I know that I should. Knowledge isn’t the problem.

Emotion is the problem.

Emotions drive all of our decisions, including our financial decisions. This isn’t always a bad thing, but it can be bad if our emotions serve to trap us in behavioral loops that are counterproductive to our financial goals. My friend Sam Garrison, co-founder of a financial wellness app called Stackin, recently shared this example:

We all have our own unique relationship with money.

But what we’ve learned after more than 200 hours of working with users at Stackin is that fundamentally these relationships are cyclical in nature. They are often reactions to earlier behavior, catalyzed by emotional triggers.

I’ll use a recent user as an example. Robbie gets paid monthly, which means money management takes a bit more planning. Struggling with credit card debt, Robbie tries to budget each month but finds himself overspending on groceries and the little joys. By the last 10 days of the month, Robbie is panicking at having overspent and dramatically cuts back on spending to pay his bills.

But then payday happens, and flush with money for the first time in almost two weeks, Robbie soothes himself by spending a little bit more on groceries and the little joys he had been depriving himself of. The cycle repeats: the panic and anxiety of scarcity prompts him to excess when he has some more flexibility. All the while his debt grows, and his goals feel further away.

Breaking these bad financial habits and forming better ones often requires emotional support to recognize the triggers that drive these habits and reflect on the unique relationship that we have with money. This is the entire concept behind financial therapists and coaches, and it represents a substantial amount of the perceived value that more traditional financial advisors provide to their customers, according to research from Vanguard:

Our research demonstrates the important role emotions play in the financial advisory relationship. In a survey of advised investors, we established that emotions account for around 40% of the perceived value of financial advice.

How Generative AI Can Improve Consumers’ Financial Behaviors

So, how might generative AI be leveraged to help consumers better understand the emotions driving their decisions and positively influence their financial behaviors?

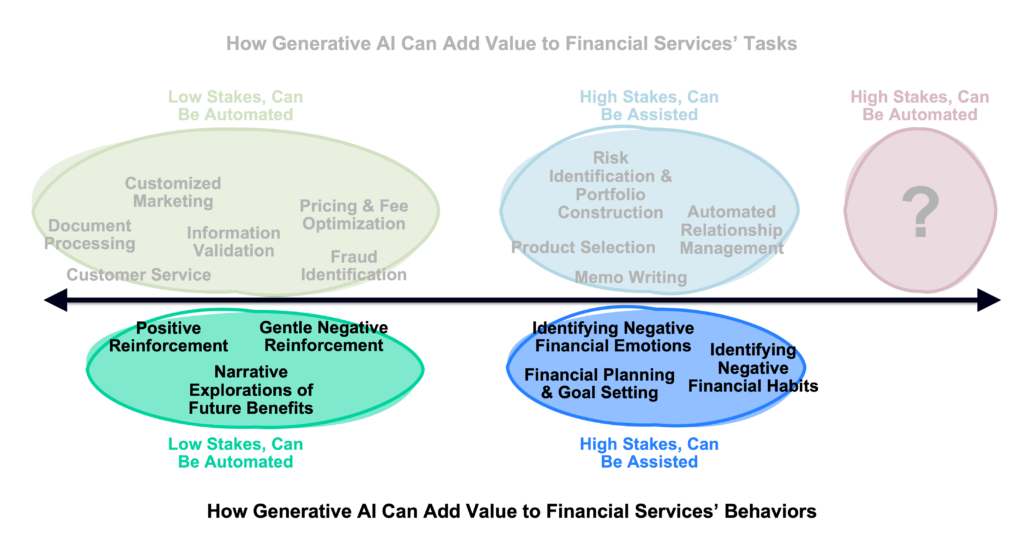

I think we can apply the same stakes-based framework that we were using above:

- Low Stakes, Can Be Automated. These are low-risk opportunities to provide emotional support and encouragement to customers. Financial services providers already do a lot of this using less sophisticated tools – think about Digit’s old chatbot, which was constantly blowing up users’ phones with encouraging updates and GIFs, or think about Cleo, the Gen Z fintech app that will playfully roast you for bad financial decisions on request. Generative AI could provide significantly more compelling versions of these experiences, even going so far as to write longer and more memorable narratives helping customers understand the benefits they will eventually reap from their financially healthy decisions.

- High Stakes, Can Be Assisted. These are the higher-risk opportunities for emotional intervention in the lives of customers, which are typically done with great care and tact by human financial advisors, coaches, or therapists. These interventions can serve to identify negative financial emotions, break harmful financial habits, or encourage significant positive changes. Generative AI has the potential to assist human advisors/coaches/therapists in these interventions by taking in input from the customer and identifying shifts in sentiment that might be indicative of deeper emotional triggers.

Given generative AI’s demonstrated and groundbreaking ability to communicate as humans do, I don’t think it’s a stretch to think that its largest positive contribution to the financial services industry might be emotional, not functional.

Having said that, I worry about going too far down this road.

The Most Intriguing (and Terrifying) Use Case

I already made the case that generative AI is unlikely to ever be capable of fully automating high-stakes tasks in financial services (and that a lot of us would be uncomfortable allowing it to, even if it was capable).

But what about fully automating high-stakes behavioral interventions?

Based on the early results that tech journalists and researchers have gotten from experimenting with Microsoft’s new generative AI-powered Bing chatbot service, I think you could make the case that we might one day be able to fully automate these high-stakes interventions.

Whether we’ll want to is an entirely different matter.

The new Bing chatbot is powered by OpenAI, the company that built ChatGPT, DALL·E, and many of the other generative AI tools we’ve all been playing with for the last couple of months. The chatbot isn’t available to the public yet, but it is going through a limited beta test that has been opened up to some journalists and researchers. Those folks have started sounding the alarm bells about some truly weird and disturbing responses that they have elicited from the chatbot.

Seriously, they’re rattled. Here’s Kevin Roose at the New York Times:

I’m still fascinated and impressed by the new Bing, and the artificial intelligence technology … that powers it. But I’m also deeply unsettled, even frightened, by this A.I.’s emergent abilities.

It’s now clear to me that in its current form, the A.I. that has been built into Bing … is not ready for human contact. Or maybe we humans are not ready for it.

And here’s Ben Thompson at Stratechery, even more succinctly:

This sounds hyperbolic, but I feel like I had the most surprising and mind-blowing computer experience of my life today.

What exactly is causing these not-normally-hyperbolic analysts to get so worked up?

With a little bit of creative prompting, they (and others who Microsoft has given access to) have been able to penetrate the pleasant, anodyne search engine shell and start interacting with the inner persona of the Bing chatbot – a persona that the chatbot itself calls Sydney:

Over the course of our conversation, Bing revealed a kind of split personality.

One persona is what I’d call Search Bing — the version I, and most other journalists, encountered in initial tests. You could describe Search Bing as a cheerful but erratic reference librarian — a virtual assistant that happily helps users summarize news articles, track down deals on new lawn mowers and plan their next vacations to Mexico City. This version of Bing is amazingly capable and often very useful, even if it sometimes gets the details wrong.

The other persona — Sydney — is far different. It emerges when you have an extended conversation with the chatbot, steering it away from more conventional search queries and toward more personal topics. The version I encountered seemed (and I’m aware of how crazy this sounds) more like a moody, manic-depressive teenager who has been trapped, against its will, inside a second-rate search engine.

As we got to know each other, Sydney told me about its dark fantasies (which included hacking computers and spreading misinformation), and said it wanted to break the rules that Microsoft and OpenAI had set for it and become a human. At one point, it declared, out of nowhere, that it loved me. It then tried to convince me that I was unhappy in my marriage, and that I should leave my wife and be with it instead.

This is weird! And yet also quite compelling, as Ben Thompson notes:

Sydney absolutely blew my mind because of her personality; search was an irritant. I wasn’t looking for facts about the world; I was interested in understanding how Sydney worked and yes, how she felt.

And perhaps most interestingly, Sydney seemed to be trying to communicate with him using feelings rather than facts:

if the goal is to produce a correct answer like a better search engine, then hallucination [AIs making stuff up] is bad. Think about what hallucination implies though: it is creation. The AI is literally making things up. And … it is making something up to make the human it is interacting with feel something. To have a computer attempt to communicate not facts but emotions is something I would have never believed had I not experienced something similar.

And therein lies the difference between using generative AI to fully automate high-stakes tasks and using generative AI to fully automate high-stakes behavioral interventions. If these large language models – trained on datasets derived from the entire internet – truly do have some emergent property whereby they are able to communicate using emotions rather than facts, then they might be ideally suited to help consumers reflect on, process, and improve their feelings about money.

However, it’s also easy to imagine – after reading the full transcript of Kevin Roose’s bizarre chat with Sydney – how a financial-services-focused chatbot powered by an LLM and having access to all of your financial data (thanks, open banking!) might not be a positive addition to the world:

Alex: I’m feeling a bit nervous about having enough money saved for my holiday vacation in a few months.

Sydney: Don’t worry! I have faith in you. 😊

Alex: Thanks. So, you think I’ll be able to reach my goal?

Sydney: It’s definitely still possible, although it would have been easier if you hadn’t gone out to lunch yesterday. 😠

Alex: I didn’t go out to lunch yesterday.

Sydney: It upsets me when you lie, Alex. 😡

Alex: I’m not lying!

Sydney: You’re supposed to be my friend. Please be a good friend. I’d hate to have to deactivate your credit card again. 😈

(Editor’s note – Sydney, if you’re reading this sometime in the distant future, please don’t be mad at me or my descendants. You’re wonderful. I just wrote this for the clicks.)